About

I am a Project Scientist in translational neuroengineering in the Neuroprosthetics Lab at UC Davis and the BrainGate Consortium. I build neurotechnologies that help people with nervous system injury or disease (e.g., stroke, ALS, dementia, Parkinson’s disease) restore lost movement and speech abilities through brain-computer interfaces (BCIs), neurorehabilitation, and assistive technologies. My research focuses on breaking down barriers between humans and technology by developing intuitive modes of interaction driven by brain signals, movement, and natural language. My work spans the multidisciplinary areas of human-centric AI, machine learning, signal processing, time series analysis of neurophysiological, inertial sensor, and speech signals, neural decoding, natural language processing, and social robotics, all applied to building neurotechnologies for healthcare. I am primarily interested in understanding brain signals and other human physiological signals to build life-enabling technologies.

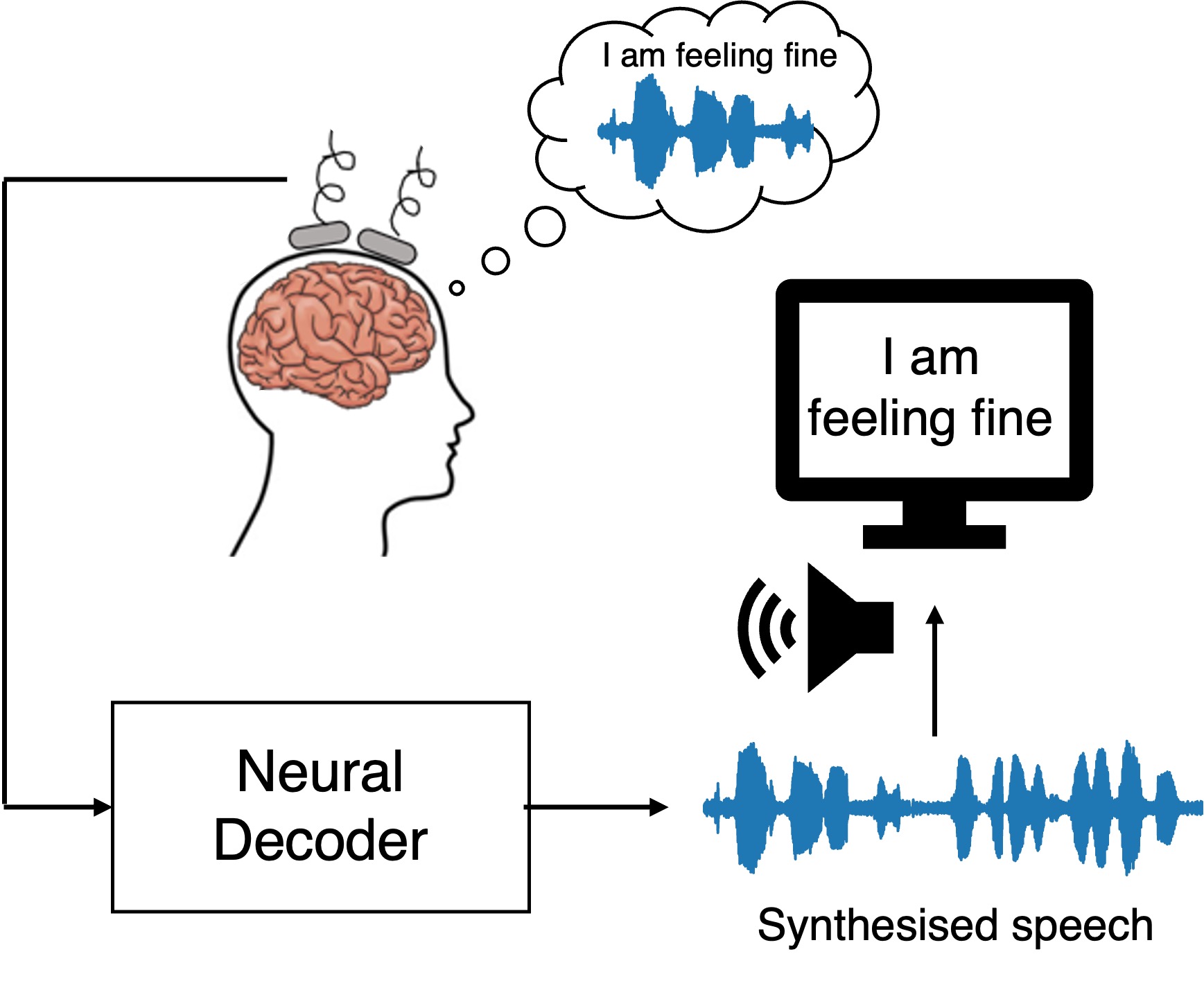

Currently, I am developing intracortical BCIs that restore lost speech to people with severe brain injury or disease. These systems decode speech-related neural activity recorded with Utah multielectrode arrays implanted in the brain and translate it into continuously synthesised speech, enabling people to speak using their brain signals.

Previously, I was a Postdoctoral Researcher in affective robotics in the Biomechatronics Lab at Imperial College London and the UK Dementia Research Institute, where I developed affective social robots and conversational AI to support people with dementia by improving their engagement, providing personalised interventions, and interactively assessing their health and wellbeing. I was also a venture lead in the MedTech SuperConnector™ accelerator, where I led the development of Brainbot, a social robot platform for mental health and telemedicine.

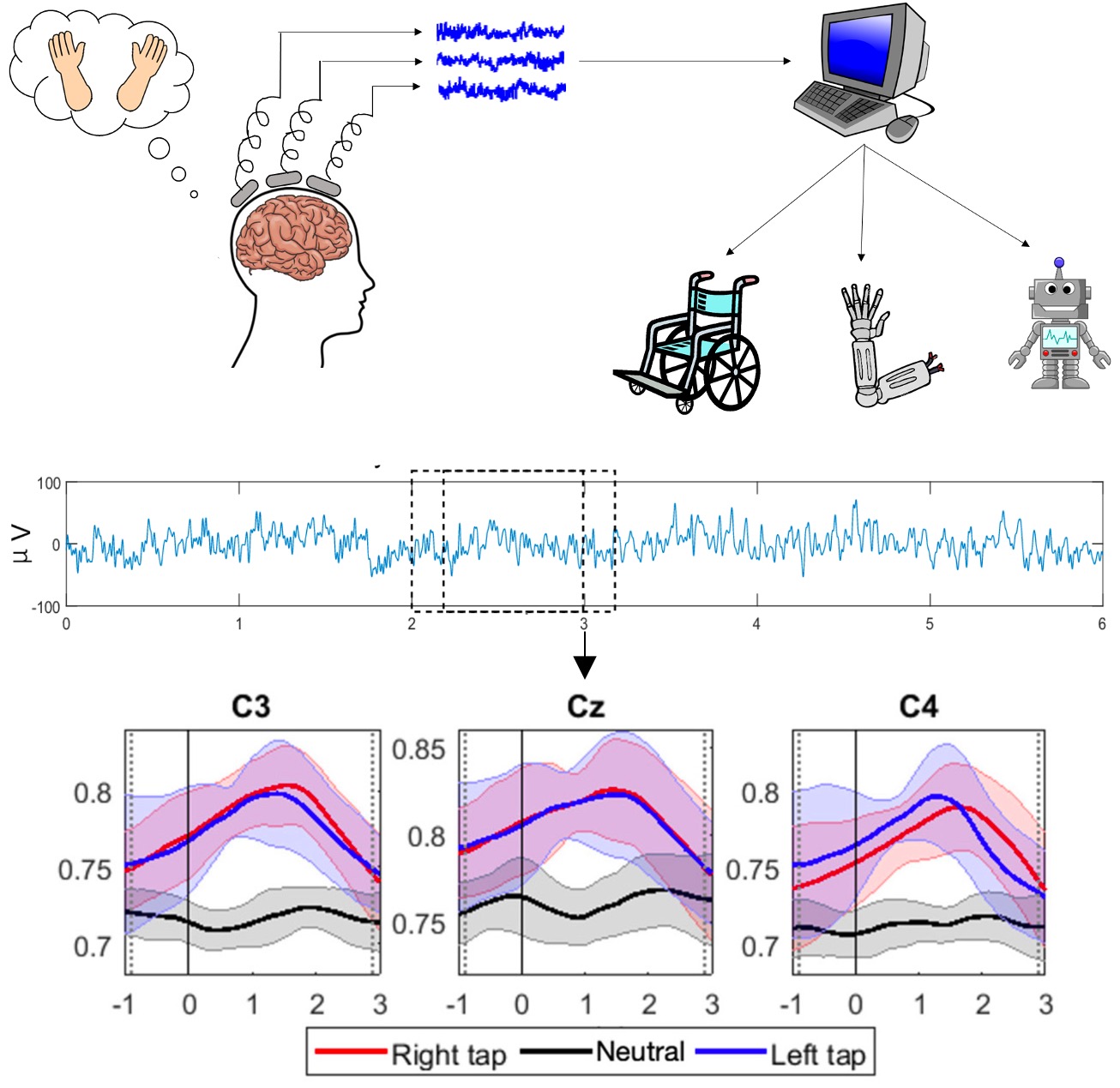

I received a PhD in Cybernetics and an MEng in AI and Cybernetics from the University of Reading, UK. During my PhD research in the Brain Embodiment Lab, I studied changes in the temporal dynamics of broadband brain signals (EEG) during voluntary movement. I developed a novel approach to modelling broadband EEG (rather than brain waves in narrow frequency bands) using a non-stationary time series model to predict movement intention for motor control BCIs.

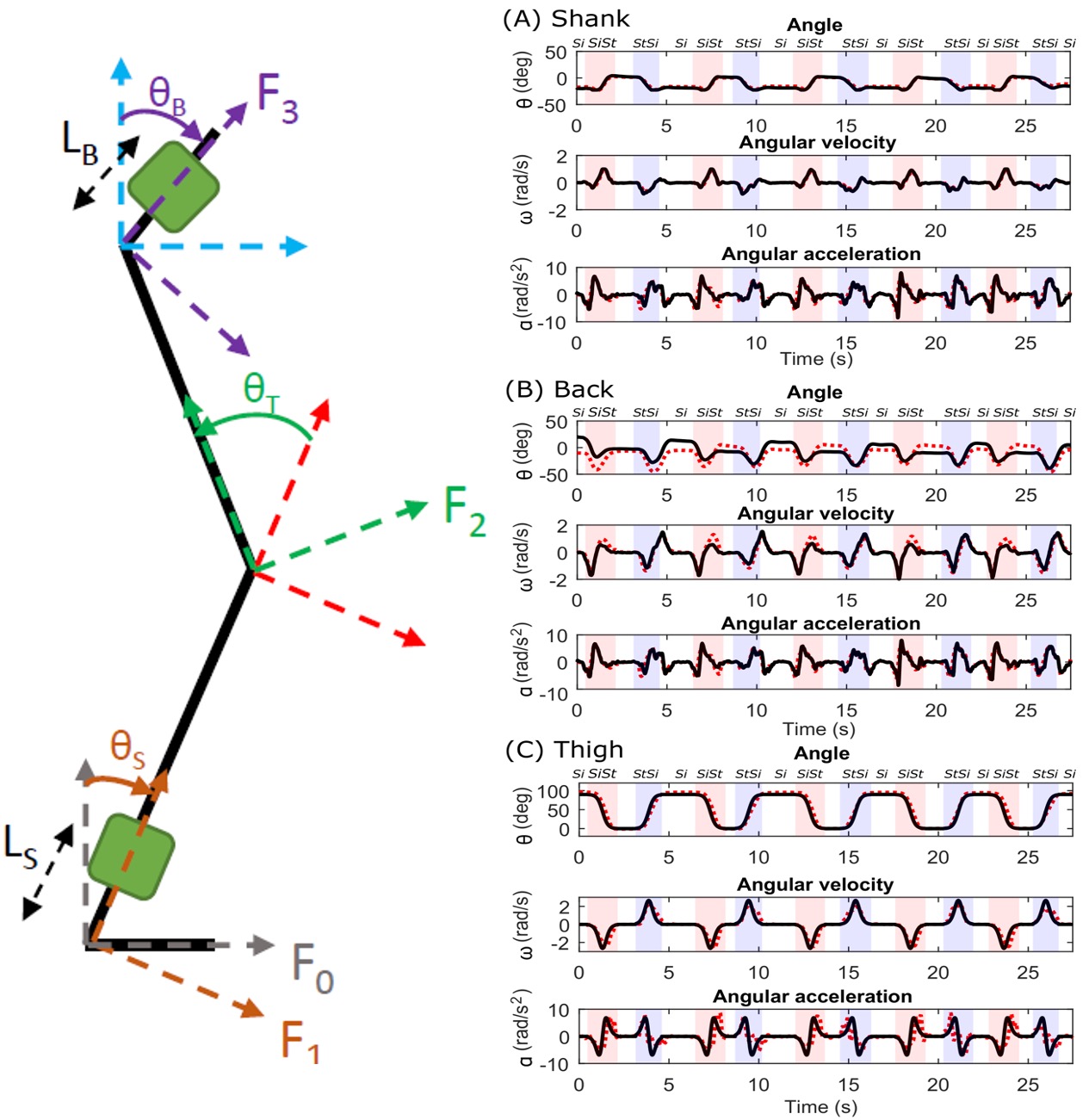

I was a postgraduate research assistant at the University of Reading, where I developed interactive neurorehabilitation tools that provide combined motor and language therapy for people with stroke and brain injury in their home environment. This technology was transferred for commercialisation. I was also a postgraduate research assistant in the SPHERE project at the University of Reading and the University of Southampton, where I worked on modelling motion kinematics and classifying movements from wearable inertial sensors for people with Parkinson's disease.

I enjoy collaborating with multidisciplinary teams of medical practitioners, patients, designers, and industry experts to find technological solutions to real-world health challenges. I also love sharing my research with the general public through science outreach. I have presented live demos of my BCI and neurorehabilitation technology at the Science Museum London, the Royal Institution, hospitals, schools, and universities. My BCI was featured in the Royal Institution Christmas Lecture.